Fake it till you make it with OpenAI video

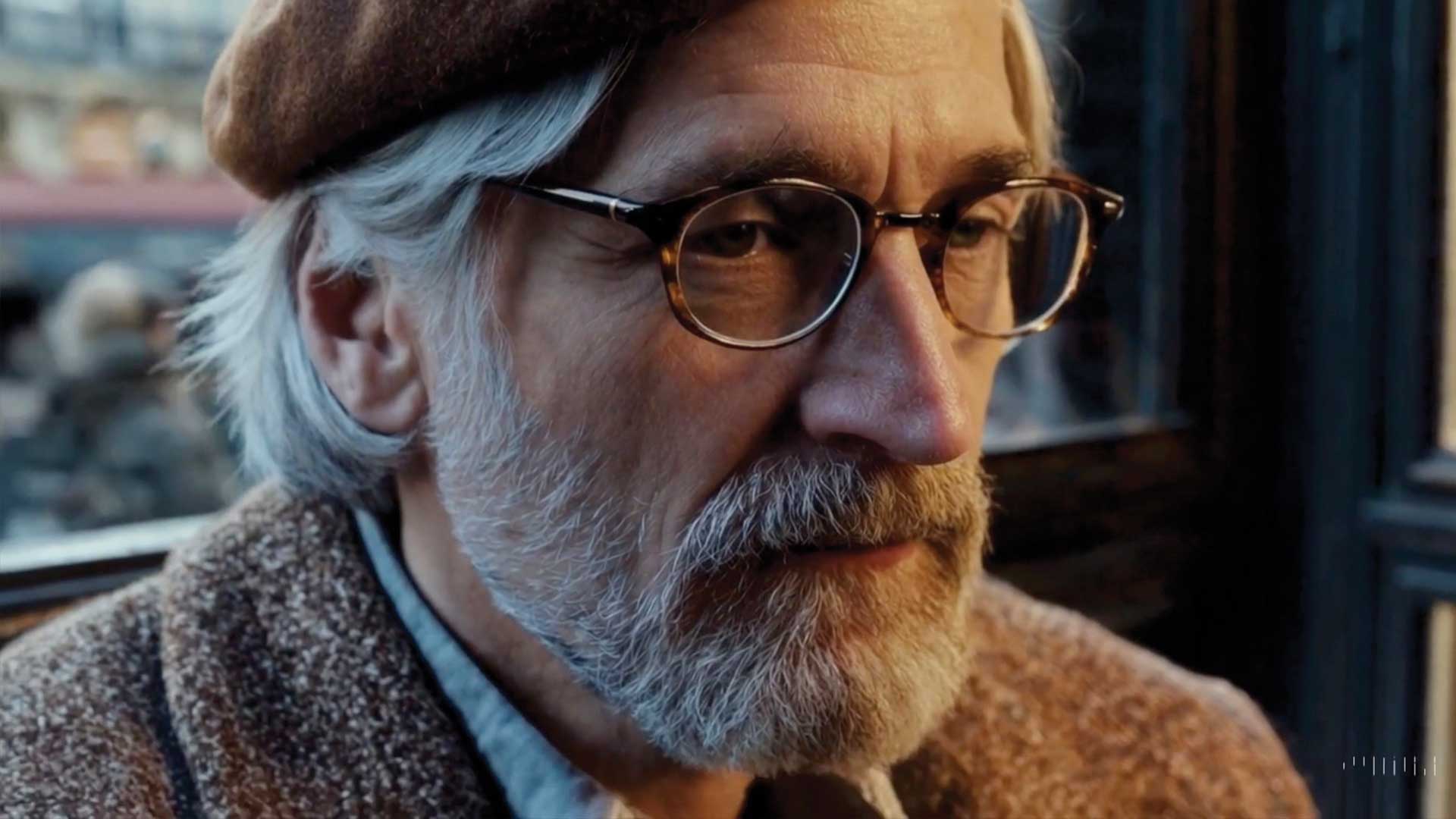

OpenAI are back at it, this time with Sora, a text-to-video model able to crank out highly realistic (or not, depending on your preferences) videos that hold up under a casual glance. Watch closely and you can still see the usual jank we’ve come to expect from this generation of AI models, but the results are startlingly good, especially for a product in its first iteration.

One problem generative models have had with creative outputs is a lack of consistency. This has meant people have had to train models on specific faces so they can reapply the same face to each image. Inconsistency can be a massive barrier for video, where a character could morph over the course of a clip. But that doesn’t seem to be a problem for Sora. According to OpenAI, “Sora can also create multiple shots within a single generated video that accurately persist characters and visual style.”

Some of the outputs shown off so far have been pretty spectacular. Subtle things like camera focal points are well simulated, and reflections of people in glass stay consistent as lighting conditions change. Its ability to create a 3D kids’ scene that looks like it’s been pulled from all the worst parts of children’s programming on YouTube is also unparalleled.

Currently, the model is available to a selection of creatives and to what OpenAI call red teamers: experts in areas like misinformation, hateful content, and bias, who will be testing the model with these topics in mind. No doubt they will be doing their best to crank out X-rated videos of celebrities and generate clips of Biden declaring war on sandbags.

The model will have the same safeguards as OpenAI’s other products to combat this sort of thing, though, including text filters that reject requests for extreme violence, sexual content, hateful imagery, celebrity likeness, or the IP of others. Intellectual property has long been a big concern for OpenAI, which is currently fighting a lawsuit alleging that at least some of the 300 billion words it scraped off the internet were taken without permission.

As well as accepting highly detailed text prompts, OpenAI says “the model is able to take an existing still image and generate a video from it, animating the image’s contents with accuracy and attention to small detail. The model can also take an existing video and extend it or fill in missing frames.”

So brace yourselves for even more 60fps remastered footage from the 1900s, now with an extra, previously unseen half hour of filled-in AI steampunk madness.

Text-to-video isn’t new, but OpenAI’s offering produces higher-resolution output than its competitors, and since OpenAI is the mainstream option of choice, once it’s doing something, that something is here to stay.

We’ve already seen generated images infest marketing; it’s only a matter of time before documentaries take advantage of this technology too. It might even prove to be a useful tool in the low-budget VFX scene. Will we ever be able to believe what we see again?

If Sam Altman has his way and our money, no.